BASIC tokenization examined

If you’re in the habit of transferring BASIC files from old computers to modern ones, you might discover strange characters in the files. They look like an odd mix of text and binary. They are not compiled programs, however. In old-school BASIC, there was a difference between compilation and tokenization.

Compilation converted BASIC code to machine code, and compiled files would usually be stored with a file extension indicating that the code should be run directly rather than interpreted. Often, this extension was some variation of “.BIN”. On personal computers at least, compilation usually required third-party software to convert the BASIC statements to machine code.

Compiled programs were no longer BASIC. They were machine code. They couldn’t be listed or edited, at least in BASIC. It generally wasn’t possible to convert a compiled BASIC program back to the original BASIC. Like any other compiled software, if you wanted to recompile it you needed to keep the source code on hand.

By default, BASIC programs on these personal computers were not saved as straight ASCII, either. While saving as text was usually an option, most BASICs tokenized programs both in memory and on disk by default. Tokenized files were usually saved with some variation of a .BAS file extension, often the very same extension used for straight ASCII, non-tokenized files. Whether a file was tokenized or not was recorded as a flag in the directory entry for that file, not in the file itself.

Unlike compiling a program, which translates code statements and functions into machine language, tokenizing a program leaves it BASIC. On the computers I used, tokenization was mostly, if not completely, a one-to-one translation of each BASIC statement or function to the one- or two-byte token for that statement or function. This saved space, and both disk space and RAM were limited on older personal computers. But it also made it much easier for the system to run the code on the fly (to interpret it), and made the interpretation much faster.

Without tokenization, the difference between RESET and RESTORE in the Radio Shack Color Computer’s Extended Color BASIC, for example, doesn’t show up until the fourth character is compared. With tokenization, the difference shows up on comparing the first byte: 9D is the token for RESET and 8F is the token for RESTORE. Nor is there any reason to scan for the end of the statement or function, because each statement or function is at most two bytes.
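A minimal Python sketch makes that one-to-one translation concrete. It is a simplification, not Extended Color BASIC’s own tokenizing routine: the table holds only the tokens mentioned in this article, keywords are matched greedily wherever they appear outside quotes (so with this tiny table a variable such as TOTAL would wrongly lose its TO), and everything after REM is copied through untouched.

```python
# Partial token table: only the tokens mentioned in this article.
# The real Extended Color BASIC table is much larger.
TOKENS = {
    "CLS": 0x9E, "INPUT": 0x89, "PRINT": 0x87,
    "GO": 0x81, "TO": 0xA5, "SUB": 0xA6,
    "REM": 0x82, "RESET": 0x9D, "RESTORE": 0x8F,
}
# Try longer keywords first, so a longer keyword wins over a shorter one
# that starts the same way.
KEYWORDS = sorted(TOKENS, key=len, reverse=True)


def tokenize(text: str) -> bytes:
    """Replace keywords in one line of BASIC text with their token bytes."""
    out = bytearray()
    i = 0
    in_quotes = False
    while i < len(text):
        ch = text[i]
        if ch == '"':
            in_quotes = not in_quotes  # quoted text stays plain ASCII
        elif not in_quotes:
            word = next((w for w in KEYWORDS if text.startswith(w, i)), None)
            if word is not None:
                out.append(TOKENS[word])
                i += len(word)
                if word == "REM":  # the rest of a remark is not tokenized
                    out += text[i:].encode("ascii")
                    break
                continue
        out += ch.encode("ascii")
        i += 1
    return bytes(out)


print(tokenize("GOTO 10").hex(" "))  # 81 a5 20 31 30
```

With this table, GOTO 10 comes out as the same five bytes that line 40 of the example program below occupies in the hex dump.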

Detokenization, or conversion from the tokens back to the textual representation of each statement, simply reverses the process. The interpreter did this every time the user listed a program. On a modern computer, a detokenization program should be easy to write in just about any scripting language. As long as you know the format, detokenization is just a matter of going through the tokenized file byte by byte and converting tokens back to their equivalent BASIC statement or function.
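As a rough illustration, here is that byte-by-byte loop as a Python sketch. It handles only single-byte tokens, its table contains only the tokens mentioned in this article, and bytes inside quotes are passed through untouched; everything else about a real BASIC’s format is assumed away.

```python
# Partial reverse table: only single-byte tokens mentioned in this article.
NAMES = {
    0x9E: "CLS", 0x89: "INPUT", 0x87: "PRINT",
    0x81: "GO", 0xA5: "TO", 0x82: "REM",
    0x9D: "RESET", 0x8F: "RESTORE",
}


def detokenize(body: bytes) -> str:
    """Turn one tokenized line body back into text, byte by byte."""
    parts = []
    in_quotes = False
    for b in body:
        if b == 0x22:
            in_quotes = not in_quotes
        if b >= 0x80 and not in_quotes:
            # Unknown tokens are shown as {XX} rather than guessed at.
            parts.append(NAMES.get(b, f"{{{b:02X}}}"))
        else:
            parts.append(chr(b))  # plain ASCII passes straight through
    return "".join(parts)


print(detokenize(bytes.fromhex("81 a5 20 31 30")))  # GOTO 10
```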

Here’s an example of tokenization; I’m using the TRS-80 Color Computer’s Extended Color BASIC because I have the tools easily available to tokenize it, but the basic idea will be the same for most old-school BASICs.

Let’s take a look at a very simple, and typical, intro-to-BASIC program:

```
10 CLS
20 INPUT "NAME";A$
30 PRINT "HELLO, ";A$;"!"
40 GOTO 10
```

I tokenized it using decb copy -b -t hello.txt HELLO.BAS, and then did a simple hex dump of the tokenized file using hexdump HELLO.BAS:1

```
0000000 26 04 00 0a 9e 00 26 14 00 14 89 20 22 4e 41 4d
0000010 45 22 3b 41 24 00 26 2b 00 1e 87 20 22 48 45 4c
0000020 4c 4f 2c 20 22 3b 41 24 3b 22 21 22 00 26 35 00
0000030 28 81 a5 20 31 30 00 00 00
0000039
```

The first two bytes (26 04) are the address of the next line, stored high byte first. When detokenizing from a file you can probably ignore these addresses, if your particular BASIC uses them at all. They’re mainly for running the code: if you have GOTO 60 in a line, the interpreter can find line 60 without having to interpret tokens to get there. It just has to jump from line to line until it hits a line with the number 60.
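That jump-from-line-to-line search is easy to sketch in Python. The sketch is hypothetical (find_line is not part of any tool mentioned here) and assumes that the first byte of the file was loaded at address 9726, the offset decb uses; it reads each two-byte value high byte first, as the 26 04 above implies.

```python
import struct

# HELLO.BAS exactly as shown in the hex dump above.
HELLO = bytes.fromhex(
    "26 04 00 0a 9e 00 26 14 00 14 89 20 22 4e 41 4d "
    "45 22 3b 41 24 00 26 2b 00 1e 87 20 22 48 45 4c "
    "4c 4f 2c 20 22 3b 41 24 3b 22 21 22 00 26 35 00 "
    "28 81 a5 20 31 30 00 00 00"
)


def find_line(code: bytes, target: int, base: int = 9726):
    """Return the file offset of line `target`, or None if it doesn't exist."""
    address = base  # memory address of the first line (assumed decb value)
    while True:
        offset = address - base
        (next_address,) = struct.unpack_from(">H", code, offset)  # high byte first
        if next_address == 0:  # a zero link marks the end of the program
            return None
        (number,) = struct.unpack_from(">H", code, offset + 2)
        if number == target:
            return offset
        if number > target:  # lines are stored in order, so stop early
            return None
        address = next_address  # jump straight to the next line


print(find_line(HELLO, 30))  # 22
```

No token is examined along the way; only the four header bytes of each line are read.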

The next two bytes are the line number: 000A is 10. The next byte, 9E, is the token for CLS. Then 00 marks the end of the line.

The next line (line 20) again begins with two bytes indicating the address (26 14) of the line that comes after it.

After 26 14, there’s 00 14. That’s line 20: 14 in hexadecimal is 20 in decimal. Then, 89 is the token for INPUT. Then, 20 is a space; 22 is a quote, 4E 41 4D 45 is the word “NAME”, and 22 is the closing quote. Statements and functions are tokenized; the rest remains ASCII. Finally, 3B is the semicolon, 41 is the letter A, and 24 is the dollar sign that marks the variable as a string. And 00 marks the end of line 20.

Do the math, and line 20 takes up 2614 - 2604 bytes (remember, it’s hexadecimal), or 16 bytes. Let’s add them up:

| Size | Contents |
| --- | --- |
| 2 bytes | the next line’s address |
| 2 bytes | the line number |
| 1 byte | the tokenization of INPUT |
| 1 byte | the space between INPUT and its prompt |
| 6 bytes | the text “NAME”, including the two quotes |
| 1 byte | the semicolon |
| 2 bytes | the variable A$ |

That’s 15 bytes. The missing byte is the end-of-line marker: add one for the end-of-line zero, and we have 16 bytes.
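The same bookkeeping can be checked mechanically. This Python sketch just slices line 20 out of the dump and confirms that the sizes in the table, plus the terminator, match the difference between the two next-line addresses; the variable names are illustrative.

```python
import struct

# Line 20 of HELLO.BAS, copied from the hex dump.
LINE_20 = bytes.fromhex("26 14 00 14 89 20 22 4e 41 4d 45 22 3b 41 24 00")

# The header is two big-endian 16-bit values: next-line address, line number.
next_address, line_number = struct.unpack(">HH", LINE_20[:4])
token = LINE_20[4]        # 0x89, INPUT
rest = LINE_20[5:-1]      # plain ASCII: space, "NAME", semicolon, A$
terminator = LINE_20[-1]  # 0x00 end-of-line marker

# Sizes from the table: link, line number, token, space, "NAME", semicolon, A$
parts = [2, 2, 1, 1, 6, 1, 2]
assert sum(parts) == 15
# Add the terminator and it matches the gap between the two link addresses.
assert sum(parts) + 1 == len(LINE_20) == 0x2614 - 0x2604 == 16
```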

Using this format we can write a line-aware hexdump that will make it easier to see the correspondence between each line and its PEEKable (and POKEable, if you’re daring) hex values. I wrote basicDump (Zip file, 3.8 KB) in Perl, but it could be done in any language. Using basicDump HELLO.BAS I get the following output:

```
10  0004  9e                                              ..
20  000A  89 20 22 4e 41 4d 45 22 3b 41 24                . "NAME";A$.
30  001A  87 20 22 48 45 4c 4c 4f 2c 20 22 3b 41 24 3b 22 . "HELLO, ";A$;"
    002A  21 22                                           !".
40  0031  81 a5 20 31 30                                  .. 10.
```

By default, the script doesn’t show the hex values for the next line address or the line number; if you want to see them, use the --raw switch.
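A line-aware dump needs little more than the link-following loop. The following Python sketch is not basicDump itself but a hypothetical reimplementation of the idea, assuming the decb base address of 9726 and a file with no disk header. The offset column it prints is each line’s code position within the file, which lines up with the addresses in the output above (0004, 000A, 001A, 002A, 0031); unlike basicDump, it does not mark the end of each line in the ASCII column.

```python
import struct

# HELLO.BAS exactly as shown in the hex dump earlier.
HELLO = bytes.fromhex(
    "26 04 00 0a 9e 00 26 14 00 14 89 20 22 4e 41 4d "
    "45 22 3b 41 24 00 26 2b 00 1e 87 20 22 48 45 4c "
    "4c 4f 2c 20 22 3b 41 24 3b 22 21 22 00 26 35 00 "
    "28 81 a5 20 31 30 00 00 00"
)


def dump_rows(code: bytes, base: int = 9726, width: int = 16):
    """Yield (line_number, offset, chunk); line_number is None on continuation rows."""
    offset = 0
    while offset + 2 <= len(code):
        (link,) = struct.unpack_from(">H", code, offset)
        if link == 0:  # zero link: end of program
            break
        (number,) = struct.unpack_from(">H", code, offset + 2)
        start = offset + 4     # skip the link and the line number
        end = link - base - 1  # stop before the 00 end-of-line marker
        for i in range(start, end, width):
            yield (number if i == start else None, i, code[i:min(i + width, end)])
        offset = link - base   # the link is the next line's address


def format_row(number, offset, chunk) -> str:
    """Render one row as line number, offset, hex bytes and printable ASCII."""
    label = "" if number is None else str(number)
    hexes = " ".join(f"{b:02x}" for b in chunk)
    text = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
    return f"{label:>5} {offset:04X} {hexes:<47} {text}"


if __name__ == "__main__":
    for row in dump_rows(HELLO):
        print(format_row(*row))
```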

Using decb, the address offset is always 9726 (hexadecimal 25FE). I wouldn’t be surprised if different configurations of real hardware have different offsets, especially since there’s a memory location that holds the start of BASIC.2
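For a file from some other source, one way to estimate the offset is to work backward from the first line: its next-line address, minus the length of that line, is where the first line began. For HELLO.BAS that is 2604 hexadecimal (9732) minus the 6-byte first line, or 9726. Here is a hypothetical Python sketch, assuming the file starts directly with the first program line (no disk header) and that the first line’s text contains no 00 bytes, which holds for ordinary lines but not necessarily for POKEd ones.

```python
def base_offset(code: bytes) -> int:
    """Estimate the load address of the first line from its next-line link."""
    first_link = int.from_bytes(code[0:2], "big")  # high byte first
    # Search from byte 4 so a zero byte in the line number isn't mistaken
    # for the end-of-line marker.
    first_line_length = code.index(0, 4) + 1  # header + text + 00 terminator
    return first_link - first_line_length
```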

Knowing that the first two bytes of each line are the address to the next line, and the next two bytes are the line number itself, here’s how to get the maximum line number in a string of tokenized code:

```perl
#get the highest line number in the tokenized code
#(bytesToDecimal, defined elsewhere in basicDump, converts two bytes to a number)
sub maximumLine {
    my $code = shift;
    my $address = $baseOffset;
    my $line = 0;

    do {
        #the line number follows the two-byte next-line address
        my $newLine = bytesToDecimal(substr($code, $address-$baseOffset+2, 2));
        $address = bytesToDecimal(substr($code, $address-$baseOffset, 2));
        #a next-line address of zero marks the end of the program, not a line
        $line = $newLine if $address;
    } while ($address);

    return $line;
}
```

The same technique could just as well detokenize the BASIC file to provide a listing. Use basicDump HELLO.BAS --list to see it. The script will print back the text version of the code, using an array of tokens.3 There are both one-byte and two-byte tokens. Except for GOTO and GOSUB, statements are single bytes. The two GO statements start with byte 129 (hexadecimal 81). In this sample program, only GOTO has a two-byte tokenization.4 Functions are two-byte tokens that start with byte 255 (hexadecimal FF).
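The two-byte function tokens are the only real wrinkle. Here is a minimal Python sketch of a line lister that handles them; list_line is a hypothetical helper, the statement table holds only tokens named in this article, and the two function entries are assumed values included to exercise the FF prefix, so check them against the memory map before relying on them.

```python
# Single-byte statement tokens mentioned in this article.
STATEMENTS = {
    0x9E: "CLS", 0x89: "INPUT", 0x87: "PRINT",
    0x81: "GO", 0xA5: "TO", 0xA6: "SUB", 0x82: "REM",
}
# Second bytes of FF-prefixed function tokens. These two values are ASSUMED
# for illustration; verify them against the memory map before relying on them.
FUNCTIONS = {0x80: "SGN", 0x86: "PEEK"}


def list_line(body: bytes) -> str:
    """Detokenize one line body, expanding one-byte and FF-prefixed two-byte tokens."""
    out = []
    i = 0
    in_quotes = False
    while i < len(body):
        b = body[i]
        if b == 0x22:
            in_quotes = not in_quotes
        if in_quotes or b < 0x80:
            out.append(chr(b))  # plain ASCII, or anything inside quotes
        elif b == 0xFF and i + 1 < len(body):
            i += 1  # a function token: consume its second byte too
            out.append(FUNCTIONS.get(body[i], f"{{FF {body[i]:02X}}}"))
        else:
            out.append(STATEMENTS.get(b, f"{{{b:02X}}}"))
        i += 1
    return "".join(out)
```

Because GO and TO are separate tokens, the lister needs no special case for GOTO: 81 A5 lists as GOTO, and 81 20 A5 lists as GO TO.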

Here’s the main loop. It just goes through the code, using the next line address to dump the code line by line.

```perl
#read the code
$code = do { local $/; <> };
$baseOffset = 9726;

#maximum line number size
$maxLineNumberWidth = length(maximumLine($code));

#loop through each line of code
#(byteTwo, defined elsewhere in basicDump, removes the next two bytes from
#$code and returns them along with their numeric value)
$cumulatedOffset = 4;

while (length($code)) {
    #read the next line address; an address of zero marks the end of the program
    #(checking the address rather than the line number keeps a line 0 from ending the loop)
    ($nextLineBytes, $nextLine) = byteTwo();
    exit if $nextLine == 0;
    #convert the address into the length of this line's code, including its 00
    $nextLine -= ($baseOffset + $cumulatedOffset);
    ($lineNumberBytes, $lineNumber) = byteTwo();

    if (printableLine($lineNumber)) {
        #start printing line number
        printf "%${maxLineNumberWidth}i\t", $lineNumber;

        #print the code for this line (the 00 terminator only in raw mode)
        $lineCode = substr($code, 0, $nextLine-1);
        $lineCode .= substr($code, $nextLine-1, 1) if $raw;

        if ($detokenize) {
            detokenizeLine($lineCode);
        } else {
            $lineCode = "$nextLineBytes$lineNumberBytes$lineCode" if $raw;
            $address = $cumulatedOffset;
            $address -= 4 if $raw;
            dumpLine($address, $lineCode);
        }

        #and, end the line
        print "\n";
    }

    #prepare for next line by removing the line we just went through
    $code = substr($code, $nextLine);
    $cumulatedOffset += $nextLine+4;
}
```

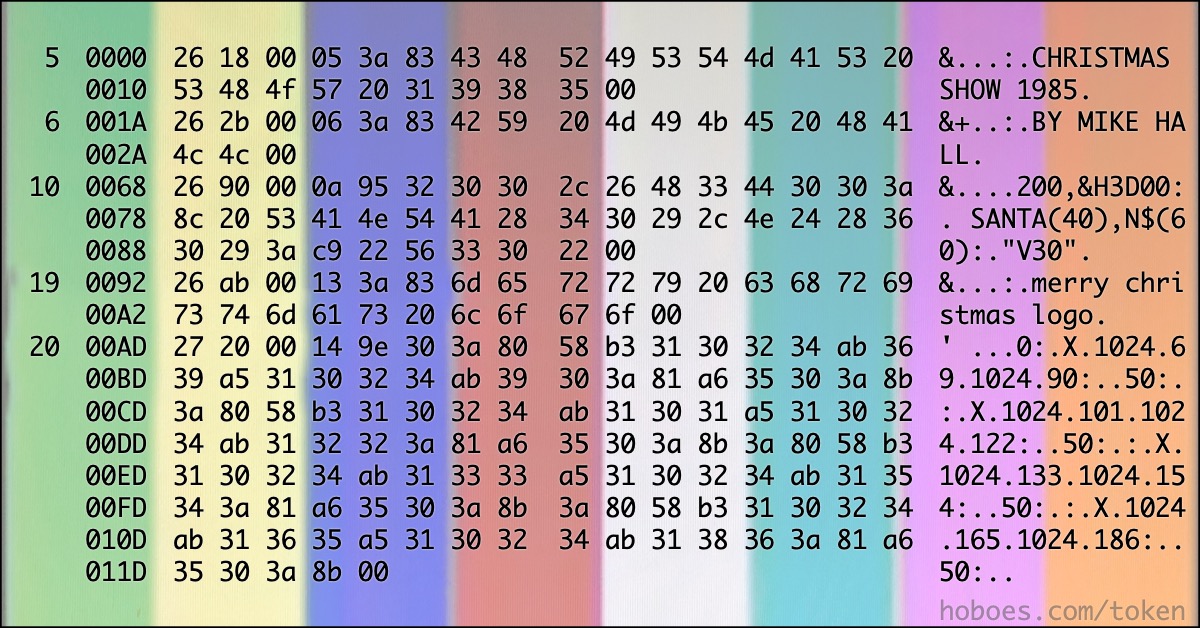

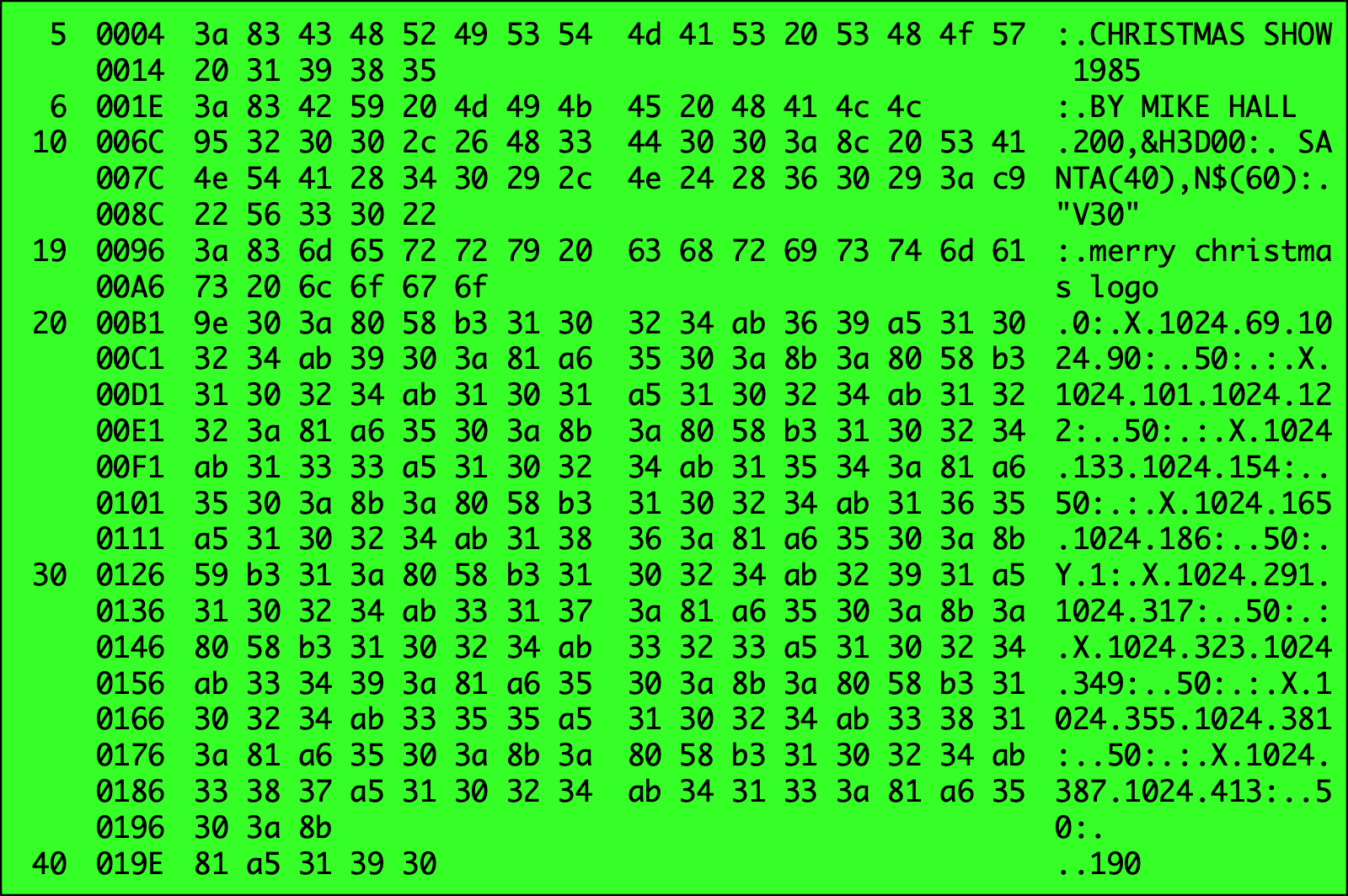

Here’s an example dump from a real program, Mike Hall’s XMASSHOW.BAS from “Christmas Pageantry” in the December, 1985 issue of The Rainbow.

I generated that using basicDump XMASSHOW.BAS 5 6 10-49. If you provide line numbers, the script limits the dump to those lines. You can specify one range and any number of single lines.
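The selection logic is simple to sketch. The following Python helper is hypothetical, not basicDump’s own printableLine: it turns arguments such as 5 6 10-49 into a test for each line number and, unlike the script, accepts any number of ranges.

```python
def parse_selection(args):
    """Build a line-number predicate from arguments like ["5", "6", "10-49"]."""
    singles, ranges = set(), []
    for arg in args:
        if "-" in arg:
            low, high = (int(part) for part in arg.split("-", 1))
            ranges.append((low, high))
        else:
            singles.add(int(arg))

    def wanted(number: int) -> bool:
        if not singles and not ranges:  # nothing requested: show every line
            return True
        return number in singles or any(low <= number <= high for low, high in ranges)

    return wanted
```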

If you take a close look, you might notice something I learned from this very hexdump. Remarks can be added to BASIC in two ways: with the REM statement and with a single apostrophe. The REM-as-apostrophe has a tokenization code of hexadecimal 83, which you can see in the first and second lines. But it’s not the first character of the first and second lines, even though it’s the first character of the BASIC code of each of those lines. The first character is 3A. As you can see in the ASCII portion of the dump, 3A is the ASCII code for a colon.

Extended Color BASIC always adds a colon in front of REM-as-apostrophe, and removes the colon when listing a file. Even if the code already contains a colon before the apostrophe, ECB still adds one.5 This means that while the apostrophe takes up two fewer characters on the screen than REM, it takes up double the space in RAM. A normal remark is tokenized as the single byte 82; for all practical purposes the apostrophe is tokenized as the two bytes 3A 83, the 3A being the invisible colon.
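A lister has to undo that quirk. Here is a minimal Python sketch covering only plain ASCII, REM, and the apostrophe; list_remarks is a hypothetical helper that drops any 3A immediately followed by an 83, and it does not check whether such a pair sits inside a quoted string. Both a lone apostrophe remark and one typed after an explicit colon list the way they were typed.

```python
COLON, REM, APOSTROPHE = 0x3A, 0x82, 0x83


def list_remarks(body: bytes) -> str:
    """List a line body, hiding the colon ECB stores in front of an apostrophe."""
    out = []
    for i, b in enumerate(body):
        if b == COLON and body[i + 1:i + 2] == bytes([APOSTROPHE]):
            continue  # the invisible colon is never shown
        if b in (REM, APOSTROPHE):
            out.append("REM" if b == REM else "'")
            out.append(body[i + 1:].decode("latin-1"))  # remark text is not tokenized
            break
        out.append(chr(b))
    return "".join(out)
```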

I’m not sure how useful this script is, but it was a fun example of how to navigate a tokenized BASIC program. And it did highlight an interesting quirk of the Color Computer’s BASIC. Had I had the script back then, it would probably also have helped me track down the very annoying problem I ran into typing in Le Lutin from The Rainbow.

The script does not (currently) handle weird DATA elements when producing a detokenized list. It does handle weird strings and weird remarks; all three would be possible using POKE to alter a BASIC program to contain, for example, graphics characters. My purpose was not to write a full detokenizer, since there are already tools that do this easily for the Color Computer. But the script does show how easy it is to manipulate tokenized BASIC.

You may find this helpful for other old BASICs that might not have such tools. And it may also be helpful for creating tools that modify tokenized code and rewrite a new tokenized file. The format is simple enough that as long as you keep track of the address of the next line, you should be able to create tokenized files easily in new scripts.
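As a sketch of that bookkeeping, the following Python function assembles already-tokenized line bodies into a complete program, computing each next-line address from the running length of the output. build_program is a hypothetical helper, and its default base of 9726 is the decb offset mentioned earlier.

```python
def build_program(lines, base: int = 9726) -> bytes:
    """Assemble (line_number, tokenized_body) pairs into a tokenized program.

    `base` is the address the first line will occupy; 9726 matches decb.
    """
    out = bytearray()
    for number, body in lines:
        # This line occupies 4 header bytes, its body, and a 00 terminator,
        # so the next line starts right after all of that.
        next_address = base + len(out) + 4 + len(body) + 1
        out += next_address.to_bytes(2, "big")  # high byte first
        out += number.to_bytes(2, "big")
        out += body + b"\x00"
    out += b"\x00\x00"  # a zero next-line address ends the program
    return bytes(out)
```

Fed the four tokenized line bodies of the example program, it reproduces the 57 bytes of HELLO.BAS from the hex dump.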

This is how compressed BASIC files were created: utility programs could remove spaces from tokenized files that couldn’t be removed by editing the lines manually. ONAGOTO100,200,300 is illegal, because the tokenizer can’t tell whether the variable is A followed by the statement GOTO or the variable AGOTO. When you type it, Extended Color BASIC requires a space between A and GOTO.

But once the GOTO is tokenized, it’s obvious that it isn’t part of the variable name, so the space can be removed by utility programs, usually written in machine language. The utility has to keep track of the number of bytes removed and update the subsequent next-line addresses accordingly.
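Here is a minimal Python sketch of such a utility, under loud assumptions: it removes every space outside quoted strings, not just the ones the editor insisted on; it leaves everything after REM (82) or the apostrophe (83) alone; it does not special-case DATA statements, where spaces can matter; and it assumes the decb base address of 9726. The helper names are hypothetical.

```python
def split_program(code: bytes, base: int = 9726):
    """Return (line_number, body) pairs by following the next-line links."""
    lines, offset = [], 0
    while True:
        link = int.from_bytes(code[offset:offset + 2], "big")
        if link == 0:  # a zero link (or running off the end) stops the walk
            return lines
        number = int.from_bytes(code[offset + 2:offset + 4], "big")
        lines.append((number, code[offset + 4:link - base - 1]))  # drop the 00
        offset = link - base


def squeeze(body: bytes) -> bytes:
    """Drop spaces outside quoted strings; leave remarks exactly as they are."""
    out, in_quotes = bytearray(), False
    for i, b in enumerate(body):
        if b in (0x82, 0x83) and not in_quotes:  # REM or apostrophe: keep the rest
            return bytes(out) + body[i:]
        if b == 0x22:
            in_quotes = not in_quotes
        if b != 0x20 or in_quotes:
            out.append(b)
    return bytes(out)


def compress(code: bytes, base: int = 9726) -> bytes:
    """Squeeze every line, then recompute each next-line address."""
    out = bytearray()
    for number, body in split_program(code, base):
        body = squeeze(body)
        # header (4 bytes) + body + 00 terminator = len(body) + 5
        out += (base + len(out) + len(body) + 5).to_bytes(2, "big")
        out += number.to_bytes(2, "big") + body + b"\x00"
    return bytes(out + b"\x00\x00")  # zero link ends the program
```

On the example program it saves three bytes: the spaces after INPUT, PRINT, and GOTO. The space inside "HELLO, " survives because it is inside quotes.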

You might wonder, how many spaces can you really remove that you couldn’t remove while typing, and the answer is—not many. But when you’ve only got 16 kilobytes or less every byte counts.

Update March 8, 2023: updated basicDump to support files that include the disk flag, such as files created via Theodore (Alex) Evans’s basic_utils for CoCo. With help from Allen Huffman.

In response to TRS-80 Color Computer Programming Tools: The TRS-80 Color Computer was a fascinating implementation of the 6809 computer chip, and was, from the Color Computer 1 through 3, possibly the longest-running of the old-school personal computers.

The hexdump command-line program is available by default on most Unix-like systems, including macOS and Linux.

↑Addresses 25 and 26 contain the start of the BASIC program. See page 2 of Bob Russell’s Color Computer Memory Map. It may be that this is the default start of BASIC in Disk Extended Color BASIC; the default start of BASIC in Extended Color BASIC is 7680. These also change depending on how many graphics pages you PCLEAR—PCLEAR 1 moves the start of BASIC down to 3072. (According to Richard A. White in “Marrying Machine Language To BASIC” in The Rainbow, May, 1984.)

↑I took the token codes from pages 24, 25, 39, 40, 47, and 48 of Bob Russell’s Color Computer Memory Map.

↑Technically, GOTO and GOSUB do not each consist of one two-byte token, but of two one-byte tokens. You can just as well write them with spaces: GO TO and GO SUB. The GO is tokenized as 129, and the TO or SUB as 165 or 166.

↑Offhand, it looks like ECB also always adds a colon in front of ELSE statements.

- (Color Computer) basic_utils: Theodore (Alex) Evans

- “A collection of python scripts for processing BASIC program files for vintage computers. Supported machines are Tandy/Radio Shack Color Computer 1, 2, and 3 and Dragon 32/64.”

- basicDump (Zip file, 3.8 KB)

- Dump TRS-80 Color Computer tokenized BASIC line by line.

- Color Computer Memory Map: Bob Russell at TRS-80 Color Computer Archive

- “This memory map has been created after many hours of research, investigation and experimentation.”

- New, improved rcheck+ for Rainbow Magazine code

- I’ve added several features to rcheck, including verifying the checksum against an expected checksum, and providing possible reasons for checksum discrepancies.

- The Rainbow Magazine December 1985 at Internet Archive

- The 1985 Christmas issue contains, as usual, some nice graphics and sound for the season.

- ToolShed (os9 & decb)

- “ToolShed brings you the ease and speed of developing these programs to your modern personal computer running Windows, Mac OS X or Linux. The tools in the shed consist of a relocatable macro assembler/linker and intermediate code analyzer, a stand-alone assembler, an OS-9/Disk BASIC file manager and more.”


"This is how compressed BASIC files were created. Spaces could be removed from tokenized files that couldn’t be removed by editing the lines manually. ONAGOTO100,200,300 is illegal, because the tokenizer can’t tell whether the variable is A followed by the statement GOTO or if it’s the variable AGOTO. When typing it, Extended Color BASIC requires a space between A and GOTO."

In my opinion this is a very unpleasant feature of this MS BASIC implementation. Others accomplish it better, like the CBM BASIC branch, which gives tokens precedence over variable names. In this case "AG" is not part of any known token, so the tokenizer advances, checks for GOTO, and tokenizes it. The preceding A is left alone. The disadvantage of this, however, is that a variable name must not contain a token name ...

Johann Klasek in Vienna, Austria at 11:17 p.m. March 14th, 2023
