Why should everyone learn to program?

“Programming for all” is a catch-all term I use for anything about programming that I think is relevant to anyone using a computer. Mostly, I use it for simple scripts such as those in 42 Astoundingly Useful Scripts and Automations for the Macintosh. But there’s a deeper meaning more appropriate to modern life, which is that everyone uses a computer almost nonstop, even people who don’t own a desktop computer, a tablet, or a smartphone. Every time you interact with the larger world, there’s a computer involved. It’s how you get billed, it’s how you leave messages for people, it’s how the lights at the intersection work and how the votes get counted on election day.

If your refrigerator has a water filter, it uses a computer to tell you when it wants you to replace the filter. Your car almost certainly has a computer that controls whether your airbag will deploy in an accident, and your washing machine likely has a computer that determines whether you’re allowed to open the lid.

Every time you find out you missed an important post on a social media platform you use, it’s because a computer algorithm did not display it in your feed, and that’s because a programmer decided on that algorithm. Every time you’re asked to reduce your electricity usage, or are forced to endure rolling blackouts, it is because decades ago some programmers used a computer model to argue that unless we switched from reliable, stored energy sources to unreliable, intermittent sources, catastrophic weather changes would ensue.

Computer programming and computer models affect every aspect of our lives, personally and politically. They can even shut down a thriving economy for a year. Understanding the nature of programming is essential to modern life.

When I first got into computers in the late seventies, there was an assumption in the books and magazines I read that schools would soon be required to teach everyone programming. From Larry Gonick’s warning[1] that “In the computer age, everyone will be required by law to memorize the powers of two, up to 2¹⁰” to Peter Laurie’s prediction[2] that secretaries would evolve into mini-programmers, writing “small programs in Pascal or BASIC” on the server to manage the office, the assumption was that well into the future, if you wanted to use a computer, you’d have to write your own software.

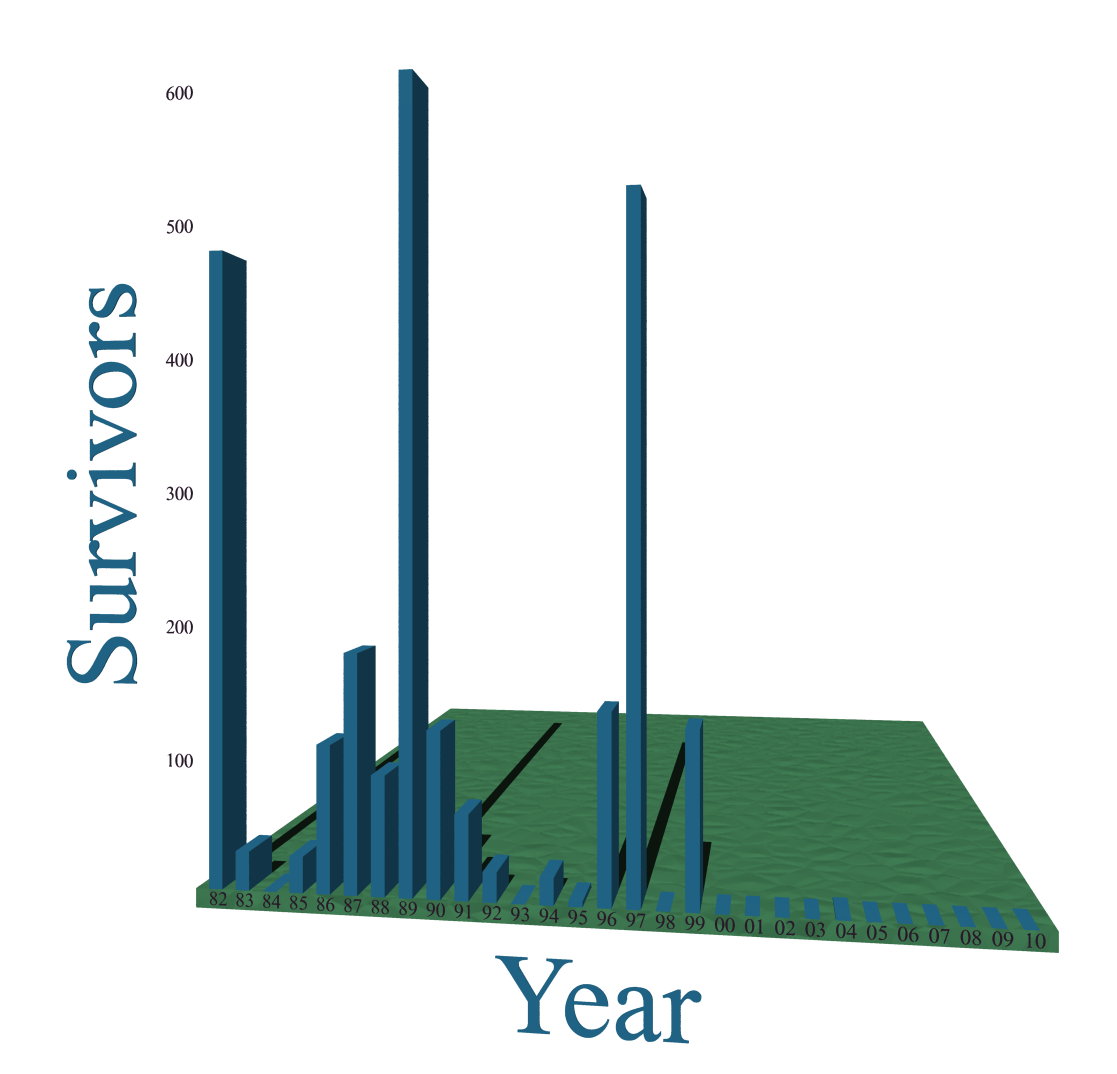

It was strange—pundits tended to get it right that everything would become a computer, and then assumed that using these ubiquitous single-purpose computers would remain like using the general-purpose computers of their day.

This is nuts, and it was nuts even then. I got a lot wrong about the future of computing as a teenager, but one thing I did recognize was that computer software would become commoditized. It’s the main reason I didn’t pursue programming as a college degree: computer programming was going to be big business managed by committee and completely uninteresting as a career path. The future of programming was going to be IBM: huge, soulless corporations like those later depicted in The Matrix.

Computer models can do anything, including “show” that greater airline safety reduces the number of survivors of airline crashes.

Because I didn’t foresee the web, I was completely wrong about programming becoming an uninteresting career, but I was right that software would become commoditized. No one needs to program a computer to do their daily job. No one needs to program a computer to manage their recipes, books, social circle[3], or household appliances. Nor should they. No one needs to be a programmer to start their bread machine, or write a letter, or drive their car to the grocery store. Their bread machine, their typewriter or phone, their car, and even the checkout lane are all computerized, but no programming is or should be necessary to use them.

At the beginning of the personal computer era, there was a thriving community not just of sharing programs but of sharing programming, through clubs, books, magazines, and the nascent network of bulletin board systems. This no longer exists—computer applications have become too complex, for the most part, for individual programmers to publish in print.

But programming should be part of everyone’s vocabulary, just as science, math, and English are learned by people who have no intention of going into the sciences, accounting, or literary criticism. Knowing how programming works is essential to being an informed citizen in a world where computers are the excuse for every failure and the backbone of every success. Not knowing this is just as bad as not knowing that all materials have their melting and burning points. That if eight ounces is $1.00, a pound should be $2.00 or less. And that the truths of Fahrenheit 451, Animal Farm, and Macbeth endure.

Literacy is not just about being able to read. It’s about knowing how to interpret what you read. Computer literacy ought to be about more than just knowing that computers exist and how to move a mouse around. Ignorance about computer programming mystifies life. It turns a straightforward process no different from baking a cake into a religion[4] complete with its own dogma and priesthood.

Programming, even simple weekend programming, is the best way to learn that computers are not infallible. Knowing that programming is nothing more than a series of steps[5] is extraordinarily important. That computer programs are created by flawed humans. That algorithms are at best approximations. And that it is simplicity itself to program a computer to provide an answer to any data, regardless of whether it’s the right answer.
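As a minimal sketch of that last point, here is a short Python example. The temperature readings and the `-999` placeholder are hypothetical, invented only for illustration. The function is correct as arithmetic, and it returns an answer for both lists; nothing in it knows that one of the answers is nonsense.

```python
def average(readings):
    """Return the arithmetic mean of a list of numbers."""
    return sum(readings) / len(readings)


# Hypothetical data: daily high temperatures in Fahrenheit.
good_week = [71, 73, 70, 75, 72, 74, 76]

# Same week, but assume the sensor was offline on two days and
# logged -999 as a placeholder instead of a real reading.
bad_week = [71, 73, -999, 75, 72, -999, 76]

good_average = average(good_week)
bad_average = average(bad_week)

print(f"Average high, clean data:        {good_average:.1f}")
print(f"Average high, with placeholders: {bad_average:.1f}")
```

The second result is a weekly average high far below any temperature ever recorded on Earth, delivered with the same confidence as the first. Whether to skip the placeholders, reject the whole week, or estimate the missing days is a choice someone has to make; the computer will not make it.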

No one who has programmed their own book, recipe, or music management system, or programmed a raytracing of a three-dimensional image, or even written a simple “hello world” program and seen how it fails, will ever trust complex data retrieval or visualization to be reliable and comprehensive.

A person who has programmed their own computer—desktop, tablet, phone—to do something useful sees computers differently. Whether they created something as simple as a macOS Quick Action to sort and count a list of items or as complex as converting letter notes to music or creating ASCII art from photos, they will see the computer as a tool rather than an oracle, and a fallible tool at that.
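To give a sense of scale for the simple end of that range, here is a rough Python sketch of sorting and counting a list of items. It is not the Quick Action itself; the function name `sort_and_count` and the shopping list are assumptions made up for this example.

```python
from collections import Counter


def sort_and_count(text):
    """Count each non-blank line and return (item, count) pairs sorted by item."""
    items = [line.strip() for line in text.splitlines() if line.strip()]
    return sorted(Counter(items).items())


# Hypothetical input: one item per line, as it might be pasted from a note.
shopping = """eggs
flour
Eggs
butter
flour
"""

for item, count in sort_and_count(shopping):
    print(f"{count}\t{item}")
```

Even a script this small has choices built in. This version treats “Eggs” and “eggs” as different items and sorts capital letters ahead of lowercase ones, so the counts it reports are only right if that is what you meant.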

The greatest benefit Apple has provided to the world is making it easy to program everything. From HyperCard stacks to AppleScripts to Quick Actions to iOS Workflows to including major scripting languages on the macOS command line, they all teach what computers ought to be able to do, and they also all teach what kinds of failures computers enable. The most malicious thing Apple does to the world is hide these features or cut them off when they become popular among non-professionals. As if programming is for an elite subset of their customers and not for all of them.

The tendency to see programming as the oracular pastime of a technological priesthood is harmful to public discourse and to cultural progress in general. It tends to remove from public discourse exactly those things that we should be discussing: the relative fallibility and accuracy of our models and our data. It places that discussion in the hands of an expert class that is invested in ignoring the assumptions and tradeoffs built into their tools. An expert class that any objective analysis would show is far from expert in public policy regardless of how proficient they might be in their particular field.

Computer programs, data, and models are the opposite of written in stone; they are changeable, malleable, and subject to the whims of the age. Computers, computer programs, and computer networks are as fallible as the humans who create and use them. Their content should always be questioned, should always be weighed in light of the assumptions and data behind the program.

All programming is about choices. The choices made in programming should always be viewed in light of the tradeoffs and compromises inherent in any choice.

They should always be considered in light of “What can this tool do to propel me, my family, my community forward while keeping in mind its flaws and its blind spots?” and “What are the assumptions behind this data or this model? What tradeoffs has the programmer ignored?”

Even when the question can’t be answered, it is critical to know that the question exists.

[1] In The Cartoon Guide to the Computer, page 118. He was joking, as he often is. I hope.

[2] From The Joy of Computers, page 151. It’s a fascinating look at the future of computers seen from 1983.

[4] I wonder, writing that, if the decline of cooking and baking is partially responsible for the rise of a foodie culture that often veers extremely close to religious wars. Whether a hot dog is a sandwich, or whether pineapple disqualifies pizza, is not a disagreement that deserves arguing over.

[5] Even if it might in real life be a parallel series of steps, or triggered on some input or timer.

English literature

- Animal Farm
  - Animal Farm is billed as “a provocative novel”, but that just underestimates our ability to be completely blind when faced with uncomfortable ideas.

- Fahrenheit 451: Ray Bradbury (paperback)
  - Ranks up with Animal Farm as one of the best social satires in the English language. “Guy Montag is a book-burning fireman…”

- Macbeth: William Shakespeare at Internet Archive
  - “A new working edition, with plot scheme and questions for intensive study, together with a reprint of Act III from a restoration version for purposes of comparison.”

programming for all

- 42 Astoundingly Useful Scripts and Automations for the Macintosh
  - macOS uses Perl, Python, AppleScript, and Automator and you can write scripts in all of these. Build a talking alarm. Roll dice. Preflight your social media comments. Play music and create ASCII art. Get your retro on and bring your Macintosh into the world of tomorrow with 42 Astoundingly Useful Scripts and Automations for the Macintosh!

- Astounding ASCII Art
  - ASCII art images created using the asciiArt script of 42 Astoundingly Useful Scripts and Automations for the Macintosh.

- The Cartoon Guide to the Computer: Larry Gonick
  - The author of “The Cartoon History of the Universe” has done it again with the computer. There’s too much emphasis on computer programming (he includes a page on each of the major BASIC language commands) but most of the information is either about the history of computers or the heart of what makes computers tick. You don’t need this book to switch your computer on. But if you’d like a better understanding of why some things always happen, such as why the numbers 256, 512, and 1024 keep popping up, you’ll not find a more entertaining way to learn.

- Music Hall
  - Source files for the Astounding Scripts player piano.

- Persistence of Vision tutorial
  - A step-by-step tutorial, available under the GNU Free Documentation License, on using the Persistence of Vision raytracer.

technology policy

- Innovation in a state of fear: the unintended? consequences of political correctness
  - Is political correctness poised to literally kill minorities as it may already have killed women, because scientists avoid critical research in order to avoid social media mobs?

- The Joy of Computers: Peter Laurie (hardcover)
  - A fascinating look at the state of computer technology in 1983.

- King Ludd
  - There are some decisions that should be left to experts, and some that should not. It makes sense that if you leave acquisitions to experts, they will recommend acquiring what they’re familiar with. And if you acquiesce to a popular vote only among experts, they will naturally choose a course of action that requires more experts.

- The plexiglass highway
  - Government bureaucracies can cause anything to fail, even progress.

- U.S. airline fatalities plummet in 2010
  - There were no airline fatalities last year. Experts credit statistical lull. Others cite strange anomalies in the data.
